Selective State Space Models Achieve Transformer Quality at Linear Cost

Recent research from Carnegie Mellon University and Princeton University (Albert Gu and Tri Dao) introduces selective state space models (S6), a sequence modeling technique that matches Transformer quality while scaling linearly with sequence length.

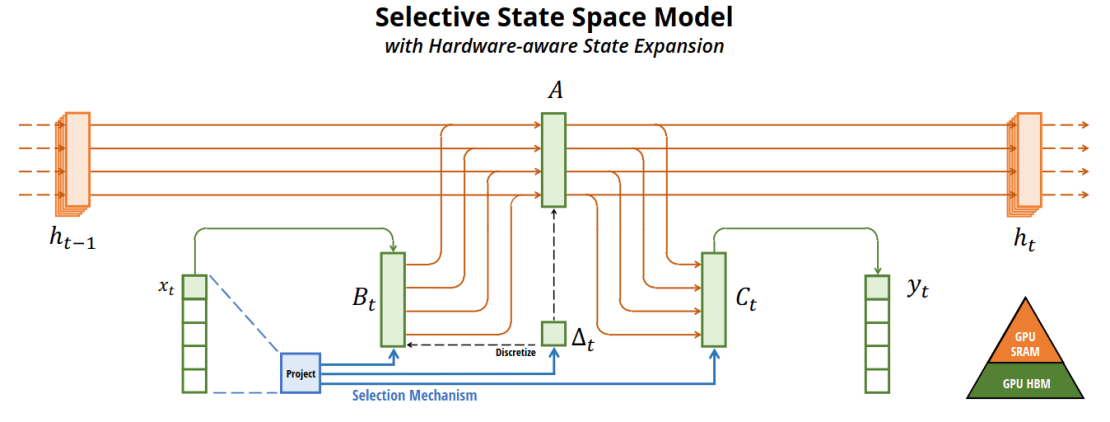

State space models (SSMs) are sequence models, closely related to recurrent neural networks, that map an input sequence to an output sequence through a hidden state. They have shown promise on sequence tasks but have lagged behind Transformers on information-dense modalities such as language. The key insight is to make the SSM parameters functions of the input. This selection mechanism lets the model propagate or forget information along the sequence depending on the current token, giving SSMs a form of content-based reasoning that earlier, time-invariant SSMs lacked and that Transformers obtain through attention.
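The selection mechanism can be sketched as a recurrence whose step size Δ_t and matrices B_t and C_t are computed from the current input. The block below is a minimal single-channel sketch in plain Python, not the authors' implementation. It assumes a diagonal state matrix A, hypothetical scalar projections (`w_delta`, `b_delta`, `W_B`, `W_C`), and the simplified discretization Ā = exp(ΔA), B̄ ≈ ΔB. It also uses a plain sequential loop rather than the hardware-aware parallel scan described in the paper.

```python
import math


def softplus(v: float) -> float:
    # Numerically stable log(1 + e^v); keeps the step size positive.
    return math.log1p(math.exp(-abs(v))) + max(v, 0.0)


def selective_scan(xs, A, w_delta, b_delta, W_B, W_C):
    """Run a single-channel selective SSM over a scalar input sequence.

    xs:               list of scalar inputs x_t
    A:                list of N negative reals (diagonal of the state matrix)
    w_delta, b_delta: hypothetical parameters; Delta_t = softplus(w_delta * x_t + b_delta)
    W_B, W_C:         hypothetical length-N projections; B_t = W_B * x_t, C_t = W_C * x_t
    """
    N = len(A)
    h = [0.0] * N  # hidden state: size N, independent of sequence length
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B = [wb * x for wb in W_B]               # input-dependent input matrix
        C = [wc * x for wc in W_C]               # input-dependent output matrix
        for n in range(N):
            A_bar = math.exp(delta * A[n])       # zero-order-hold discretization of A
            B_bar = delta * B[n]                 # simplified discretization of B (assumption)
            h[n] = A_bar * h[n] + B_bar * x      # state update
        ys.append(sum(C[n] * h[n] for n in range(N)))  # readout y_t = C_t . h_t
    return ys
```

A large Δ_t drives Ā toward 0, so the old state is discarded and replaced by the current input. A Δ_t near 0 leaves the state almost unchanged, so the input is effectively ignored. Because Δ_t depends on x_t, this choice is made token by token.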

The resulting selective SSMs are incorporated into a simplified architecture called Mamba, which uses neither attention nor separate MLP blocks. In generative pretraining on text, audio, and genomics, Mamba outperforms Transformers of the same size, and the 3B-parameter Mamba model matches Transformers twice its size. On synthetic tasks such as induction heads, it generalizes perfectly to sequences of 1 million tokens, far beyond its training length, demonstrating strong modeling of long-range dependencies.

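Compared to Transformers, Mamba achieves about 5x higher inference throughput and scales linearly with sequence length. During generation it carries a fixed-size recurrent state instead of a key-value cache that grows with every token, so each new token costs the same compute and memory. The researchers highlight its potential as an efficient, general-purpose sequence model backbone for foundation models.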
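A back-of-the-envelope count makes the memory difference behind the generation speedup concrete. The sketch below counts stored floating-point values per sequence during generation. The function names and model sizes (24 layers, model width 1024, inner width 2048, state size 16) are hypothetical, and the count ignores the small convolution buffer that a Mamba layer also keeps.

```python
def kv_cache_floats(seq_len: int, n_layers: int, d_model: int) -> int:
    # Transformer: one key and one value vector of width d_model
    # per token per layer, so memory grows with seq_len.
    return 2 * seq_len * n_layers * d_model


def ssm_state_floats(n_layers: int, d_inner: int, d_state: int) -> int:
    # Selective SSM: one d_inner x d_state recurrent state per layer,
    # independent of how many tokens have been generated.
    return n_layers * d_inner * d_state


if __name__ == "__main__":
    for seq_len in (1_000, 10_000, 100_000):
        print(seq_len, kv_cache_floats(seq_len, 24, 1024), ssm_state_floats(24, 2048, 16))
```

The key-value cache grows linearly with the number of tokens, while the SSM state stays constant. This is why per-token generation cost does not rise as the sequence gets longer.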

While promising, open questions remain about how Mamba performs at larger scales and on complex language tasks. The work shows how an efficient architecture such as an SSM can gain a core ability of Transformers, content-based selection, and so combine the strengths of both. Selective state space models point toward lower computational cost for training and deploying large, high-quality sequence models.